Technical SEO can be one of the highest-leverage areas of SEO to focus on, but sometimes it can be a total distraction. The key is knowing when it’s a high-priority project for your business.

You know they’re there – lurking technical SEO problems on your site that you want to squash like a bug. Where are they though? Which are the most important? Where do you start?

This guide is for marketers and site owners who are already somewhat familiar with SEO but need clarity on what to focus on.

There are hundreds of items to go through when doing an SEO audit or planning for the next 6 months.

But there are a few high-level items that can really mess with your site. I’ve outlined some of the top items here.

I chose these because they are both crucial to the health of your site, and also quick to diagnose. The complexity of fixing them is another issue – that depends on the size of your site and the depth of the problem.

This is not a guide to fix all of your problems, just a starting point.

Nevertheless, here is a checklist of the items you should check first before moving on to the rest of your site. Some of these may be emergencies.

The 22-Minute Technical SEO Top 10 Quick Priority List

Let’s do a quick run-through of the top 10. After you get good at checking these, you could in theory run through this in 22 minutes flat, once a week.

1. Robots.txt – if there’s a single character that can do more damage to your organic traffic than a misplaced one in your robots.txt file, I’d love to hear about it. (1-minute check)

2. Google “site:” Operator – it’s a simple command that can instantly tell you a lot about the health of your site in organic search. (1-minute check)

3. XML Sitemap – often forgotten, sometimes illuminating, the XML sitemap gives direction to search engines on how to view your pages. (1-minute check)

4. Meta Robots – worth checking even if you don’t know what it is; it can cause organic traffic issues on individual pages. (2-minute check)

5. Analytics Tracking Code – we all get busy, and some of us don’t check Google Analytics for days, only to find the tracking code has gone missing. (2-minute check)

6. Search Console > Crawl Errors – the quickest way to find high-value pages on your site you need to fix ASAP. (2-minute check)

7. Search Console > Manual Actions – most sites don’t have these, but it’s worth checking so you can sleep at night. (2-minute check)

8. Broken Internal Links – bad for user experience, but fairly easy to fix – definitely check these out. (5-minute check)

9. Linking Root Domains – use one of a few main tools to quickly see how your backlink profile stacks up against your competitors’. (3-minute check)

10. Ahrefs or SEMrush Traffic Value – a quick number that tells you whether organic search is working for you. (3-minute check)

1) Robots.txt – The Lurking Assassin

Robots.txt is like a skunk – it’s a harmless enough animal that’s actually kind of cute, until it attacks.

It’s a very simple text file found on almost every site in the world, at your-domain-name.com/robots.txt

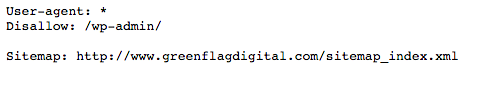

When properly implemented, it looks something like the example above.

When improperly implemented, it contains the line “Disallow: /” instead of what you see above. If that’s the case, you’re essentially preventing all search engines from crawling your website. DISASTROUS!

Action items:

Go check your website’s robots.txt file

- Does it exist?

- Be sure that it doesn’t include the “Disallow: /” line – because that will block crawlers from crawling the entire site.

- Check other folders that are blocked – are those correct in being blocked?

- Review any other directives and use Robots.txt testing tools to see the impact, such as this one from TechnicalSEO.com

Bonus: the robots.txt file should list your XML sitemap or sitemap index (with a “Sitemap:” line) to help search crawlers discover your pages.
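If you’d rather script this check, here’s a minimal Python sketch built on the standard library’s urllib.robotparser. It flags a robots.txt that blocks Googlebot from the home page and lists any Sitemap: lines. The function names are my own, and Python’s parser follows the original robots.txt rules rather than every Google extension (such as * and $ wildcards inside paths), so treat it as a first pass, not a replacement for a dedicated testing tool.

```python
import sys
import urllib.error
import urllib.request
from urllib.robotparser import RobotFileParser


def audit_robots_txt(robots_txt: str, user_agent: str = "Googlebot") -> dict:
    """Parse robots.txt text and report the two things checked above."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return {
        # False means this crawler may not fetch the home page, which is
        # exactly what a blanket "Disallow: /" rule does.
        "homepage_crawlable": parser.can_fetch(user_agent, "/"),
        # Values of any "Sitemap:" lines; site_maps() returns None if there are none.
        "sitemaps": parser.site_maps() or [],
    }


def fetch_robots_txt(domain: str):
    """Return the text of https://<domain>/robots.txt, or None if it 404s."""
    try:
        with urllib.request.urlopen(f"https://{domain}/robots.txt", timeout=10) as resp:
            return resp.read().decode("utf-8", errors="replace")
    except urllib.error.HTTPError as err:
        if err.code == 404:
            return None
        raise


if __name__ == "__main__" and len(sys.argv) > 1:
    text = fetch_robots_txt(sys.argv[1])
    if text is None:
        print("No robots.txt found (crawlers treat the site as unrestricted)")
    else:
        report = audit_robots_txt(text)
        if not report["homepage_crawlable"]:
            print("WARNING: robots.txt blocks Googlebot from the home page")
        if not report["sitemaps"]:
            print("Note: no Sitemap: line found")
        print(report)
```

Run it as `python check_robots.py yourdomain.com` (the file name is arbitrary).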

2) Google “site:” Operator – Know & Validate Your Page Count

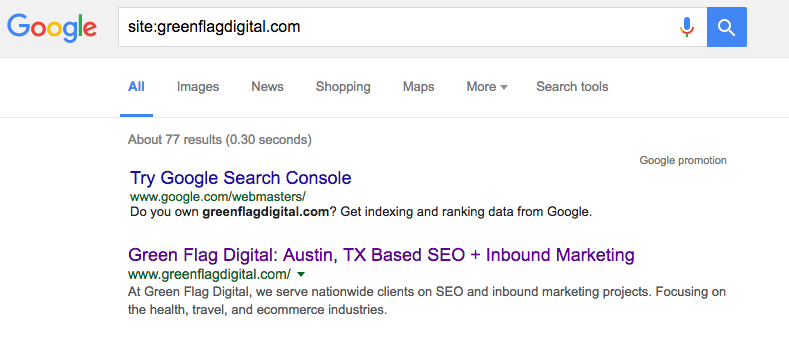

You can quickly assess whether your pages are actually indexed in Google search with this search operator. If Google returns 0 results, you have a real problem. If it shows far more or far fewer pages than you expect, that is also a problem to solve. (The count is only an estimate, but large gaps are worth investigating.)

All you need to do is type in “site:yourdomain.com”, replacing yourdomain.com with your actual website name.

Action items:

Type site:yourdomain.com into Google search

- How many pages are there? Is that more or less than you expect?

- Do you see strange subdomains such as “dev” or “staging”?

- Do you see spam or black-hat SEO pages in the results?

- Compare this number with what you see in Google Search Console to validate data integrity and highlight any other missing pages.

3) XML Sitemaps – Your Clear Index for Google

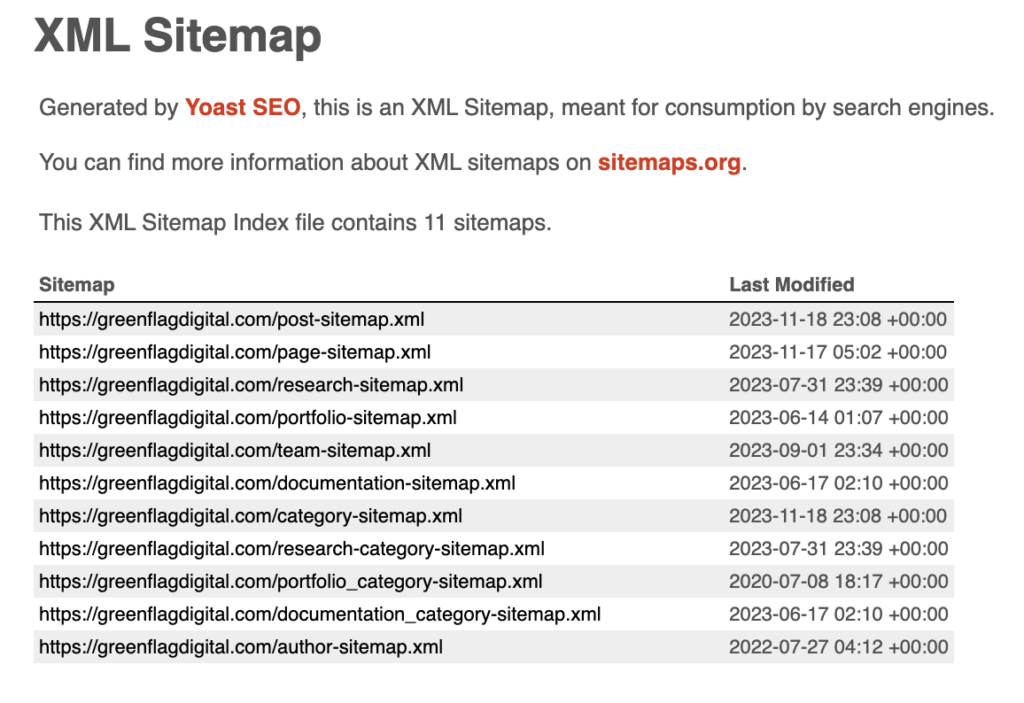

XML sitemaps list the important pages on your website that you want Google to crawl, along with when each page was last updated.

For sites with strong external links and good internal linking, an XML sitemap isn’t strictly necessary. But as sites get bigger and more complex, an XML sitemap becomes more useful. And if you have over 50,000 URLs, you’ll need to split them into multiple sitemaps (the protocol caps each file at 50,000 URLs and 50 MB uncompressed), which is where a sitemap index comes into play.

Even if you’re not managing tens of thousands of URLs, it makes sense to use separate sitemaps for images, videos, and other media, as well as for different types of posts and pages. This way you can better diagnose issues affecting different subfolders and directories.
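To make the 50,000-URL limit and the split-by-section idea concrete, here’s a rough Python sketch that groups URLs by their first subfolder, chunks each group into sitemaps, and builds a sitemap index pointing at them. The file-naming scheme (sitemap-blog-1.xml and so on) and the grouping rule are assumptions for illustration; most CMSs and SEO plugins generate this for you.

```python
from collections import defaultdict
from urllib.parse import urlparse
from xml.sax.saxutils import escape

SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"
MAX_URLS = 50_000  # per-file limit in the sitemaps.org protocol (files must also stay under 50 MB)
XML_DECL = '<?xml version="1.0" encoding="UTF-8"?>\n'


def xml_escape(text: str) -> str:
    # Escape &, <, > and both quote characters, as the sitemap protocol requires.
    return escape(text, {"'": "&apos;", '"': "&quot;"})


def urlset(urls) -> str:
    body = "".join(f"  <url><loc>{xml_escape(u)}</loc></url>\n" for u in urls)
    return f'{XML_DECL}<urlset xmlns="{SITEMAP_NS}">\n{body}</urlset>\n'


def build_sitemaps(urls, base_url, max_urls=MAX_URLS):
    """Group URLs by first subfolder, chunk each group, and build an index.

    Returns ({filename: sitemap_xml}, sitemap_index_xml). Top-level pages
    such as /about go into a "pages" group (an arbitrary choice).
    """
    groups = defaultdict(list)
    for url in urls:
        segments = urlparse(url).path.strip("/").split("/")
        groups[segments[0] if len(segments) > 1 else "pages"].append(url)

    files = {}
    for group, members in sorted(groups.items()):
        for start in range(0, len(members), max_urls):
            name = f"sitemap-{group}-{start // max_urls + 1}.xml"
            files[name] = urlset(members[start:start + max_urls])

    base = base_url.rstrip("/")
    entries = "".join(
        f"  <sitemap><loc>{xml_escape(base + '/' + name)}</loc></sitemap>\n" for name in files
    )
    index = f'{XML_DECL}<sitemapindex xmlns="{SITEMAP_NS}">\n{entries}</sitemapindex>\n'
    return files, index
```

You’d write each entry of `files` to your web root and list only the index in robots.txt.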

Action items:

- Be sure that your XML sitemap is listed in your robots.txt

- Crawl the XML sitemap with a crawler like Screaming Frog to ensure none of the pages listed in it return 404s.

- Check Google Search Console’s reporting of sitemaps and indexation of pages listed on it, as well as other errors.
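For a quick scripted version of the 404 check, this Python sketch reads a sitemap (following a sitemap index into its child sitemaps) and reports any listed URL that returns an error status. It sends HEAD requests with urllib and follows redirects; some servers answer HEAD differently from GET, and gzipped sitemaps aren’t handled, so re-check anything it flags in a crawler. The function names are hypothetical.

```python
import xml.etree.ElementTree as ET
from urllib.error import HTTPError, URLError
from urllib.request import Request, urlopen

LOC_TAG = "{http://www.sitemaps.org/schemas/sitemap/0.9}loc"


def sitemap_locs(xml_bytes: bytes):
    """Return (root element name, list of <loc> URLs) for a sitemap or sitemap index."""
    root = ET.fromstring(xml_bytes)
    kind = root.tag.split("}")[-1]  # "urlset" or "sitemapindex"
    return kind, [el.text.strip() for el in root.iter(LOC_TAG) if el.text]


def fetch_bytes(url: str) -> bytes:
    with urlopen(url, timeout=10) as resp:
        return resp.read()


def http_status(url: str) -> int:
    """Status after redirects are followed; 0 means the request failed outright."""
    try:
        with urlopen(Request(url, method="HEAD"), timeout=10) as resp:
            return resp.status
    except HTTPError as err:
        return err.code
    except URLError:
        return 0


def broken_sitemap_urls(sitemap_url, fetch=fetch_bytes, status=http_status):
    """List (url, status) pairs for sitemap entries that error, recursing into indexes."""
    kind, locs = sitemap_locs(fetch(sitemap_url))
    broken = []
    if kind == "sitemapindex":
        for child in locs:
            broken.extend(broken_sitemap_urls(child, fetch, status))
        return broken
    for url in locs:
        code = status(url)
        if code == 0 or code >= 400:
            broken.append((url, code))
    return broken
```

The `fetch` and `status` parameters are there so you can swap in your own HTTP client (or test without the network).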

4) Meta Robots – Powerful Enough to Keep you out of the SERPs

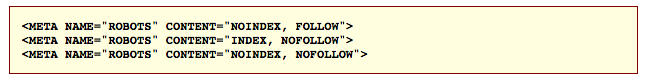

The meta robots tag is very powerful and can keep you completely out of the search results. That’s bad. There are many ways to use the meta robots tag, but the most common is adding one or more of these directives: index, noindex, follow, or nofollow, like so: <meta name="robots" content="noindex, nofollow">

Most commonly, you would noindex your pages if they are on a development subdomain that you are using for testing. There are rare cases when you would use noindex on your live pages, but generally you would not.

By default, if the tag is missing, your pages will be indexed and followed.

Action items:

Go check the source code of your home page

- Is the meta robots tag present?

- If so, does it show index, follow?

- Do you have a development version of your site that should be noindexed?
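If you want to check more than the home page, here’s a small Python sketch that pulls the directives out of any robots (or googlebot) meta tag in a page’s HTML and flags noindex. The helper names are my own, and it only reads the HTML you give it; it doesn’t look at HTTP response headers.

```python
from html.parser import HTMLParser


class MetaRobotsParser(HTMLParser):
    """Collects directives from <meta name="robots"> and <meta name="googlebot"> tags."""

    def __init__(self):
        super().__init__()
        self.directives = []

    def handle_starttag(self, tag, attrs):
        if tag != "meta":
            return
        # HTMLParser lowercases tag and attribute names, but not attribute values.
        values = {name: (value or "") for name, value in attrs}
        if values.get("name", "").strip().lower() in ("robots", "googlebot"):
            for directive in values.get("content", "").split(","):
                if directive.strip():
                    self.directives.append(directive.strip().lower())


def meta_robots_directives(html: str) -> list:
    parser = MetaRobotsParser()
    parser.feed(html)
    parser.close()
    return parser.directives


def is_noindexed(html: str) -> bool:
    directives = meta_robots_directives(html)
    # "none" is shorthand for "noindex, nofollow".
    return "noindex" in directives or "none" in directives
```

An empty list means there’s no tag, so the page falls back to the default of being indexed and followed.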

5) Analytics Tracking Code – Can be Gone in an Instant

Raise your hand if you’ve relaunched a website and a day or two later you check your analytics reporting and your traffic has plummeted. Your heart may sink until you realize the tracking code was simply missing during that period.

Unfortunately, this tag is often forgotten after a website redesign. I can guarantee you that your developers are NOT monitoring Google Analytics as much as you are, so you’re the one who needs to check this.

There are many tools to test this quickly. My favorite is GA Checker for an instant look, although for thousands of pages you’ll need a more powerful tool.

Action items:

Go check the source code of your home page

- Check your organic search traffic trends in Google Analytics

- Use GAchecker.com to test all pages on your site
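For a rough do-it-yourself version of what GA Checker does, this Python sketch scans page HTML for Google tag IDs. The ID patterns are assumptions based on common formats (GA4 measurement IDs start with G-, legacy Universal Analytics IDs with UA-, Google Tag Manager containers with GTM-), and a tag loaded indirectly by another script won’t be detected, so treat a miss as something to verify by hand.

```python
import re

# Assumed ID formats: GA4 measurement IDs (G-...), legacy Universal Analytics
# property IDs (UA-<account>-<property>), and Google Tag Manager containers (GTM-...).
TAG_ID = re.compile(r"\b(G-[A-Z0-9]{4,}|UA-\d+-\d+|GTM-[A-Z0-9]{4,})\b")


def tracking_ids(html: str) -> set:
    """Return every Google tag ID that appears in the page source."""
    return set(TAG_ID.findall(html))


def pages_missing_tracking(pages: dict) -> list:
    """Given {url: html}, return the URLs where no tag ID was found."""
    return sorted(url for url, html in pages.items() if not tracking_ids(html))
```

You’d feed it the HTML your crawler collects; the function itself makes no network requests.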

Further Reading

- Google’s Analytics Tracking Guide

- Recovering Your Organic Search Traffic from a Web Migration Gone Wrong

6) Search Console Crawl Errors – Google is Transparent for Once 🙂

You’re not going to find a better tool to detect crawl errors on your site than Google Search Console (formerly Webmaster Tools). The old Crawl Errors section has been replaced by the Page indexing report, where you’ll find the 404, soft 404, and server errors that are causing issues for your site.

The legacy report even prioritized these errors by the number of links pointing to them from your site and others, so pair today’s report with your own link data to decide what to fix first.

You should review and fix the most important crawl errors. If you’re seeing large-scale problems, you need to find the root cause. If you’re seeing individual 404 pages, you should 301 redirect them if they have external links or traffic, but if they are unimportant you should let them 404.

Google’s official message on 404s and SEO: “Generally, 404s don’t harm your site’s performance in search, but you can use them to help improve the user experience.”

Action items:

- Log into Google Search Console and check the Page indexing report (which replaced the old Crawl Errors tab)

- Review the 404 errors and fix or redirect the most important pages

- Review other error types such as server errors and soft 404s
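The triage rule above (redirect 404s that have external links or traffic, let the rest 404) can be sketched in Python like this. The input fields are assumptions: you’d fill them from a Search Console export plus a backlink tool and your analytics, and the thresholds are placeholders to tune.

```python
from dataclasses import dataclass
from typing import Dict, List


@dataclass
class NotFoundUrl:
    url: str
    referring_domains: int  # external sites linking here (from a backlink tool)
    monthly_sessions: int   # recent traffic to the URL (from analytics)


def triage_404s(errors: List[NotFoundUrl], min_domains: int = 1,
                min_sessions: int = 1) -> Dict[str, List[str]]:
    """Split 404s into 'redirect_301' and 'leave_404', most valuable first.

    The thresholds are placeholders: by default any external link or any
    traffic is enough to justify a 301 redirect.
    """
    plan = {"redirect_301": [], "leave_404": []}
    ranked = sorted(errors, key=lambda e: (e.referring_domains, e.monthly_sessions), reverse=True)
    for err in ranked:
        worth_saving = err.referring_domains >= min_domains or err.monthly_sessions >= min_sessions
        plan["redirect_301" if worth_saving else "leave_404"].append(err.url)
    return plan
```

Sorting first means the top of the redirect list is where your time pays off most.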

Further Reading

- Do 404 Errors Hurt SEO?

- How to Fix Crawl Errors in Google Webmaster Tools

- Crawl Errors Report Help (Google)

7) Search Console Manual Actions – the Worst of the Worst

Without a doubt, one of the gravest SEO problems is a manual penalty from Google. This means a human reviewer at Google has taken action against your site. It’s simple to detect, but fixing it can mean a world of pain.

If neither you nor your SEO team has ever used black-hat SEO tactics, you’ll be in the clear 99% of the time. In rare cases negative SEO can hurt you, but because manual actions are applied by Google employees reviewing sites individually, they have to be fairly certain you deserve a penalty.

Action items:

- Log into Google Search Console and check the Security & Manual Actions > Manual actions report

- If there is nothing, it will state “No manual webspam actions found”.

- If you do have a penalty applied, you should immediately get to work fixing the problem.

Further Reading

- Manual Actions Report (Google)

- How to Recover From Any Google Penalty

- Google Manual Action Penalties & How to Recover

8) Broken Internal Links – Frustrating Humans and Crawlers Since 1994

Broken internal links are bad for site users and search engine crawlers alike. If users hit too many broken links on your site, they’ll bounce and find a higher-quality site.

Bots will be stopped in their tracks and sent to a 404 page if your internal links are broken. Both are bad.

If your site is small enough, you can test its links manually, and you’ll probably catch grammatical errors along the way. For most of us, though, this is as painful as pulling teeth. Thankfully, there are lots of great tools that make it easier.

Action items:

Use one of the following tools to crawl your site for broken internal links:

- Screaming Frog SEO Spider (free & paid)

- Check My Links or Broken Link Checker Chrome plugins (free)

- Ahrefs (paid)

- Use one of the above tools to scan your site for broken links

- Update or replace the broken links. For large volumes, have an intern or outsourced team do this.

- 301 redirect any old links to new pages, if applicable
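If you’re comfortable with a little Python, here’s a bare-bones sketch of what these crawlers do: a breadth-first crawl of one host that records which internal links lead to error pages and which pages link to them. It’s an illustration, not a replacement for Screaming Frog; it ignores robots.txt, JavaScript-rendered links, and rate limiting, and the `fetcher` parameter is swappable so you can test it offline.

```python
from collections import deque
from html.parser import HTMLParser
from urllib.error import HTTPError, URLError
from urllib.parse import urldefrag, urljoin, urlparse
from urllib.request import Request, urlopen


class LinkExtractor(HTMLParser):
    """Collects the href of every <a> tag on a page."""

    def __init__(self):
        super().__init__()
        self.hrefs = []

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            self.hrefs.extend(value for name, value in attrs if name == "href" and value)


def fetch(url):
    """Return (status, html). Redirects are followed; 0 means a network error."""
    try:
        request = Request(url, headers={"User-Agent": "broken-link-sketch"})
        with urlopen(request, timeout=10) as resp:
            return resp.status, resp.read().decode("utf-8", errors="replace")
    except HTTPError as err:
        return err.code, ""
    except URLError:
        return 0, ""


def find_broken_internal_links(start_url, fetcher=fetch, max_pages=500):
    """Breadth-first crawl of start_url's host.

    Returns {broken_url: [pages that link to it]} for internal URLs that
    answered with an error status (or could not be fetched at all).
    """
    host = urlparse(start_url).netloc
    queue, seen = deque([start_url]), {start_url}
    referrers, broken = {}, set()
    while queue and max_pages > 0:
        url = queue.popleft()
        max_pages -= 1
        status, html = fetcher(url)
        if status == 0 or status >= 400:
            broken.add(url)
            continue
        parser = LinkExtractor()
        parser.feed(html)
        for href in parser.hrefs:
            target, _fragment = urldefrag(urljoin(url, href))
            if urlparse(target).netloc != host:
                continue  # external, mailto:, tel:, javascript: and similar links
            referrers.setdefault(target, set()).add(url)
            if target not in seen:
                seen.add(target)
                queue.append(target)
    return {url: sorted(referrers.get(url, ())) for url in sorted(broken)}
```

The referring pages in the output are the ones whose links you’d update (or redirect the target, per the last action item).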

Further Reading

- How to Find and Fix Broken Links on Your Website

- How to Get More Search Engine Traffic with Internal Linking

9) Linking Root Domains – A Yardstick for Competitors

There are hundreds, if not thousands, of search engine ranking factors, but the number of linking root domains is one of the most important.

Linking root domains means the number of different websites linking back to yours. If you and your competitor both have 20 links pointing to your respective sites, the differentiator is how many different, authoritative sites those links come from. If 10 of your links come from one site and the other 10 from 10 different sites, you have 11 linking root domains; if your competitor’s 20 links come from 20 different sites, they have 20, and they win.
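The arithmetic in that example is just counting distinct domains. Here’s a small Python sketch of it; the `root_domain` helper is a naive assumption that keeps the last two labels of the hostname, so it would lump every .co.uk site together. Real backlink tools handle that with the Public Suffix List.

```python
from urllib.parse import urlparse


def root_domain(url: str) -> str:
    """Naive root domain: the last two labels of the hostname.

    Assumption for illustration only: this mishandles multi-part suffixes
    such as .co.uk.
    """
    host = (urlparse(url).hostname or "").lower()
    return ".".join(host.split(".")[-2:])


def linking_root_domains(backlink_urls) -> int:
    """Count distinct root domains among the pages that link to you."""
    return len({root_domain(u) for u in backlink_urls})


# The example from the text: 20 links each, very different LRD counts.
yours = [f"https://bigblog.com/post-{i}" for i in range(10)] + [f"https://site{i}.com/" for i in range(10)]
theirs = [f"https://www.site{i}.org/" for i in range(20)]
print(linking_root_domains(yours), linking_root_domains(theirs))  # 11 20
```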

You can quickly compare the number of linking root domains using backlink checker tools. Use more than one when possible for greater accuracy, since each tool maintains its own link index.

Action items:

Use one of the following tools to check the number of linking root domains (LRD):

- Moz Link Explorer, formerly Open Site Explorer (free & paid)

- Majestic (free & paid)

- Ahrefs (paid)

- Use one of the above tools to get the LRD counts.

- Compare the counts between your site and competitors

- Use this to reverse engineer opportunities and threats for your link building strategy

10) Ahrefs & SEMrush Traffic Cost – Show Me the Money

Wouldn’t you love to know if your competitor is earning big bucks from organic search?

When you just want to see the money, there are no better tools than Ahrefs or SEMrush. You can rapidly plug in different domains and see the approximate monetary value of the traffic they get from search results.

Both tools estimate the traffic each keyword sends (based on its search volume and the site’s ranking position), multiply it by the keyword’s cost-per-click, and add up the results to get their Traffic Cost value. It’s not guaranteed to be completely accurate, but at a high level it lets you compare the organic value of one or more sites against each other.
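Here’s a rough Python sketch of that calculation. The click-through rates by position are made-up placeholders, not the figures Ahrefs or SEMrush use (each tool has its own model), so the output is only useful for comparing sites under the same assumptions.

```python
# Placeholder click-through rates by ranking position (assumed, not any tool's real model).
CTR_BY_POSITION = {1: 0.30, 2: 0.15, 3: 0.10}


def estimated_ctr(position: int) -> float:
    if position in CTR_BY_POSITION:
        return CTR_BY_POSITION[position]
    return 0.03 if position <= 10 else 0.0  # assume page 2 and beyond earns no meaningful clicks


def traffic_cost(keywords) -> float:
    """keywords: iterable of (monthly_search_volume, ranking_position, cpc_in_dollars)."""
    return sum(volume * estimated_ctr(position) * cpc for volume, position, cpc in keywords)


site = [(1000, 1, 2.00), (5000, 3, 1.50), (800, 15, 4.00)]
print(f"${traffic_cost(site):,.2f}")  # $1,350.00
```

The position-15 keyword contributes nothing here, which is why rankings matter as much as search volume in these numbers.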

You can do this same thing manually using a few other alternative tools, but if you’re looking for speed this is the way to go.

Action items:

- Go to Ahrefs.com or SEMrush.com and use the paid tool or trial

- Enter the website you want to review then use the organic research tab to get a Traffic Cost number

- Repeat this for your competitors to easily compare organic traffic revenue strength and opportunities

Further Reading

- How To Increase Website Traffic By 250,000+ Monthly Visits

- How to Use SEMrush for Competitive Keyword Research

What’s Next?

If your site passes all 10 of these checklist items, you’re in better shape than 80% of websites.

Technical SEO means different things to different people.

A small startup SaaS site with 10 pages can cover the basics while it focuses on growth hacking and ad spend. Massive, multi-million-page sites with daily data refreshes and heavy competition need a whole team monitoring and fixing technical SEO issues weekly.

Depending on what problems you’re trying to solve, use the above resources to dig in further and prioritize your problems for the next quarter.

Looking for hands-on technical SEO consulting? We can help you with that.

Update Log

- July 16, 2024: Fixed grammatical issues and added clarifications.

- Nov 23, 2023: Updated throughout

- 2020: Released as this blog post

- 2017: First created as PDF
