Context Engineering for AI means giving an AI system all the context and details it needs to solve a problem.

On June 18, 2025, Tobi Lütke, founder of Shopify, said he likes the term:

So does Amjad Masad, founder of Replit:

Philipp Schmid, of Google DeepMind, wrote a popular post on the subject and said:

“With the rise of Agents it becomes more important what information we load into the “limited working memory”. We are seeing that the main thing that determines whether an Agent succeeds or fails is the quality of the context you give it. Most agent failures are not model failures anymore, they are context failures.”

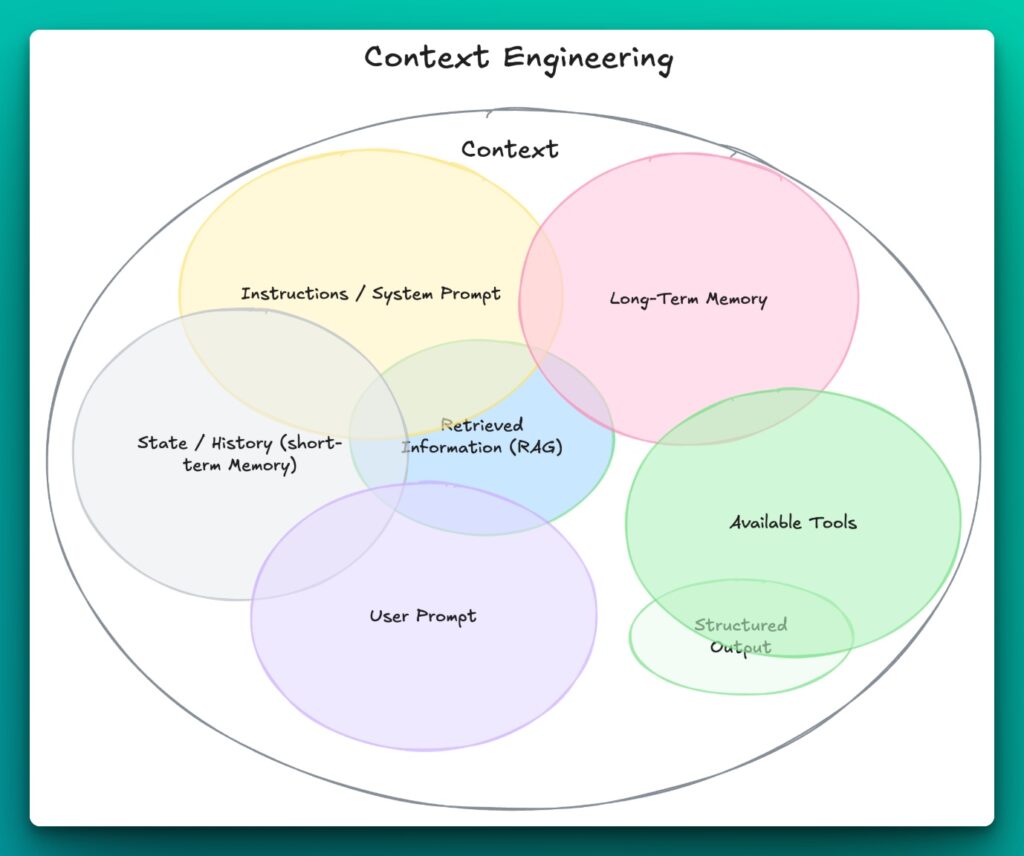

Further, he demonstrated what that looks like in this diagram:

More to come as we continue to analyze this space.

Before we go, let’s ask some LLMs what they think context engineering is. I’ll give them each this prompt:

“Give me a definition of what context engineering for AI is. Define it clearly in 2 sentences. Then tell me who first originated the term in the context of AI systems. Then tell me how most people should apply it in their work. Then weigh the pros and cons. Limit your response to 400 words.”

Claude Sonnet 4

Claude Sonnet 4 Response

Definition: Context engineering for AI refers to the systematic practice of designing and structuring the information, instructions, and framing provided to AI systems to optimize their performance and output quality. It encompasses techniques like prompt engineering, providing relevant background information, setting appropriate constraints, and crafting the input environment to guide AI behavior toward desired outcomes.

Origins: I don’t have definitive information about who first coined the specific term “context engineering” in AI. The concept evolved from prompt engineering practices that emerged with early language models, but the precise attribution of this terminology isn’t clearly documented in my knowledge.

Practical Application: Most people should apply context engineering by being deliberate about how they frame requests to AI systems. This means providing clear, specific instructions; including relevant background information; giving examples of desired outputs; setting appropriate constraints and boundaries; and iteratively refining their approach based on results. Start with clear objectives, provide necessary context without overwhelming detail, use consistent formatting, and test different approaches to find what works best for your specific use case.

Pros and Cons:

Pros:

- Dramatically improves output quality and relevance

- Reduces the need for multiple iterations and corrections

- Enables more predictable and reliable AI behavior

- Allows leveraging AI capabilities more effectively

- Can save significant time once mastered

- Enables complex task decomposition and multi-step reasoning

Cons:

- Requires time investment to learn and master

- Can become overly complex, leading to diminishing returns

- May create brittleness where small changes break the system

- Risk of over-engineering simple tasks

- Can introduce bias through poorly designed context

- Effectiveness varies across different AI models and versions

- May require constant adjustment as AI systems evolve

The key is finding the right balance—being thorough enough to get quality results without over-complicating straightforward tasks. Context engineering is most valuable for complex, repeated, or high-stakes interactions with AI systems, while simpler queries often benefit from more straightforward approaches.

ChatGPT o3

ChatGPT Response

Definition (2 sentences)

Context engineering is the craft of explicitly assembling the system messages, retrieved knowledge, conversation history, tools, schemas, and live data that enter an AI model’s context window. By treating that window like programmable “RAM,” it lets builders make the model reason consistently across many turns instead of hoping one clever prompt will suffice. (DataCamp, LangChain Blog)

Who coined it?

The earliest widely-circulated use of the term in an AI setting came from Shopify CEO Tobi Lütke’s 19 June 2025 tweet praising “context engineering” as the real skill behind reliable LLM apps; Andrej Karpathy boosted the tweet the same day, and Simon Willison plus LangChain’s Harrison Chase amplified it in blogs that week, locking the phrase into industry vocabulary. (OfficeChai, Simon Willison’s Weblog)

How to apply it in everyday work

- Map the job-to-be-done. List the facts, rules, prior messages, and external data sources an AI assistant must consult for each task.

- Automate retrieval & compression. Use RAG or embeddings to pull only the most relevant chunks, then trim or summarize them so you stay within token limits.

- Layer context deliberately. Order inputs as system instructions → role-specific policies → current user request → retrieved snippets → tool definitions → expected output schema.

- Instrument & iterate. Log every context bundle and model response; when outputs fail, diagnose whether the fault was missing, noisy, or mis-formatted context rather than the model itself. (DataCamp, LangChain Blog)

Pros

- Reliability at scale – well-engineered context keeps the 1,000th call as good as the first.

- Model-agnostic – improves performance without retraining or fine-tuning.

- Explainability – explicit context bundles make debugging and audits simpler.

Cons

- Engineering overhead – building retrieval, memory, and ordering pipelines is harder than writing prompts.

- Latency & cost trade-offs – larger contexts mean more tokens and slower responses unless carefully pruned.

- Maintenance burden – context rules, retrieval indexes, and tool specs must evolve with the product or they drift and break quality. (LangChain Blog)

Total: ≈270 words (well under 400).
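The "layer context deliberately" and "automate retrieval & compression" steps in the response above can be made concrete with a small sketch. This is not part of ChatGPT's output; it is a minimal illustration under stated assumptions: the layer names in `LAYER_ORDER` simply mirror the ordering the response suggests, `estimate_tokens` is a crude word count standing in for a real tokenizer, and the retrieved snippets are assumed to arrive sorted from most to least relevant.

```python
# Hypothetical layer names, mirroring the ordering suggested in the response.
LAYER_ORDER = [
    "system_instructions",
    "role_policies",
    "user_request",
    "retrieved_snippets",
    "tool_definitions",
    "output_schema",
]


def estimate_tokens(text: str) -> int:
    """Crude stand-in for a real tokenizer: one token per whitespace-separated word."""
    return len(text.split())


def assemble_context(layers: dict[str, list[str]], budget: int) -> str:
    """Join layers in LAYER_ORDER, dropping trailing snippets while over budget."""
    # Assumption: snippets are sorted most relevant first, so the last is the weakest.
    snippets = list(layers.get("retrieved_snippets", []))

    def render(current_snippets: list[str]) -> str:
        parts = []
        for name in LAYER_ORDER:
            if name == "retrieved_snippets":
                items = current_snippets
            else:
                items = layers.get(name, [])
            if items:
                parts.append(f"## {name}\n" + "\n".join(items))
        return "\n\n".join(parts)

    text = render(snippets)
    # Only retrieved snippets are trimmed; the other layers are always kept.
    while snippets and estimate_tokens(text) > budget:
        snippets.pop()
        text = render(snippets)
    return text
```

Trimming only the retrieved snippets is a design choice for this sketch: instructions and the user request are treated as non-negotiable, while retrieval results are the part most likely to carry noise. A real pipeline might summarize snippets instead of dropping them.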

Gemini 2.5 Pro

Gemini Response

Context engineering is the practice of designing and structuring the input provided to an AI to guide its reasoning and generate more accurate, relevant, and reliable outputs. It involves strategically providing specific instructions, examples, constraints, and relevant data to shape the AI’s behavior without altering its underlying code.

Origins of the Term

The term “context engineering” doesn’t have a single, formally recognized originator. Instead, it evolved naturally from the practice of prompt engineering as developers and users of Large Language Models (LLMs) discovered that providing a richer, more structured context was far more effective than simply refining a short prompt. It arose from the collective, practical need to gain more control and better performance from powerful but sometimes unpredictable AI systems.

How to Apply It

Most people can apply context engineering by treating their interaction with an AI not as a simple question-and-answer session, but as a briefing. You can improve your results by:

- Assigning a Persona: Start by telling the AI who it should be (e.g., “You are an expert financial analyst”).

- Providing Examples: Give one or two examples of the exact output format you want (this is called “few-shot prompting”).

- Setting Constraints: Clearly state what the AI shouldn’t do (e.g., “Do not use jargon,” “Keep the answer under 100 words”).

- Including Relevant Data: Paste in text, data, or background information for the AI to use as its source of truth.

By building this context around your core request, you guide the AI toward the desired outcome.

Pros and Cons

Pros 👍

- Increased Accuracy: Significantly improves the quality and relevance of AI-generated content.

- Better Control: Allows users to steer the AI’s tone, style, and format effectively.

- Cost-Effective: It’s much cheaper and faster than fine-tuning or retraining a model.

- Accessible: It doesn’t require advanced technical skills; anyone can learn to do it.

Cons 👎

- Time-Consuming: Crafting the perfect context can require significant trial and error.

- Model Dependent: A context that works well on one AI model may not work on another.

- Doesn’t Fix Core Flaws: It can’t overcome a model’s fundamental knowledge gaps or inherent biases.

- Complexity Risk: Overly long or confusing contexts can sometimes degrade the AI’s performance.
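The "briefing" idea from the "How to Apply It" part of the response above (persona, examples, constraints, relevant data) can be sketched as a small prompt builder. This is not part of Gemini's output, just one possible reading of it. The function name `build_briefing`, its parameters, and the section wording are all assumptions made for illustration.

```python
def build_briefing(request, persona=None, examples=(), constraints=(), source_data=None):
    """Wrap a core request in the four kinds of context listed in the response.

    All parameter names are illustrative; `examples` is a sequence of
    (input, output) pairs used as few-shot demonstrations.
    """
    sections = []
    if persona:
        sections.append(f"You are {persona}.")
    if examples:
        shots = "\n\n".join(f"Input: {q}\nOutput: {a}" for q, a in examples)
        sections.append("Examples of the expected output:\n" + shots)
    if constraints:
        sections.append("Constraints:\n" + "\n".join(f"- {c}" for c in constraints))
    if source_data:
        sections.append("Use only this source material:\n" + source_data)
    # The core request goes last so it is the final thing the model reads.
    sections.append("Request: " + request)
    return "\n\n".join(sections)
```

Calling `build_briefing("Summarize the report.", persona="an expert financial analyst", constraints=["Do not use jargon"])` yields a three-part prompt: the persona line, the constraint list, then the request. Sections that are not supplied are simply omitted.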

Claude Artifact Infographic of Phil Schmid’s Post:

Just for fun, I spun up this Claude Artifact summarizing Phil’s post in a more visual way:

Context Engineering

The art of providing comprehensive context for AI agents to accomplish tasks effectively

The 7 Pillars of Context

Context is everything the model sees before generating a response

- System Instructions: Initial behavior definitions, rules, and examples that guide the model’s responses throughout the conversation.

- User Prompt: The immediate task or question from the user that triggers the AI’s response generation.

- Short-term Memory: Current conversation history including all user and model exchanges that provide immediate context.

- Long-term Memory: Persistent knowledge base with user preferences, past project summaries, and accumulated learnings.

- Retrieved Information: External, up-to-date knowledge from documents, databases, or APIs relevant to the current task.

- Available Tools: Definitions of functions and capabilities the AI can use to interact with external systems.

- Structured Output: Format specifications that define how the model should structure its response (JSON, XML, etc.).
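One way to picture the seven components is as a single record that gets filled in before each model call. The sketch below is only an illustration: the class name `AgentContext`, the field names, and the field types are assumptions, not anything defined in Phil's post or the infographic.

```python
from dataclasses import dataclass, field


@dataclass
class AgentContext:
    """One field per component named above; names and types are illustrative."""

    system_instructions: str = ""
    user_prompt: str = ""
    short_term_memory: list[str] = field(default_factory=list)  # conversation turns
    long_term_memory: list[str] = field(default_factory=list)  # preferences, summaries
    retrieved_information: list[str] = field(default_factory=list)
    available_tools: list[dict] = field(default_factory=list)  # tool definitions
    structured_output: str = ""  # e.g. a JSON schema as text

    def missing_components(self) -> list[str]:
        """Return the names of components that are still empty."""
        return [name for name, value in vars(self).items() if not value]
```

A context built from only a system instruction and a user prompt would report the other five components as missing, which roughly corresponds to the "sees only the user's request" case.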

Cheap Demo vs. Magical Agent

Poor Context

Sees only the user’s request

Issues: Robotic, unhelpful, no awareness of context

Rich Context

Calendar + emails + contacts + tools

Magic: Natural, helpful, contextually aware

From Prompt to Context Engineering

Prompt Engineering

Context Engineering

4 Revolutionary Ideas

- Dynamic System Architecture: Context as infrastructure, meaning systematic assembly with testing, optimization, and engineering best practices rather than ad-hoc templates.

- Context Failure Diagnostics: New debugging methodology: systematically check each context component instead of assuming model limitations when agents fail.

- Cross-Functional Discipline: Context engineering as a distinct role bridging business analysts, domain experts, and engineers, like DevOps for AI.

- Temporal Context Optimization: Sophisticated timing: not just what information to include, but precisely when to surface different context components in multi-step processes.
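The "context failure diagnostics" idea, checking each context component before blaming the model, could look something like the sketch below. The specific checks (required components, duplicate snippets, a size limit), the dictionary keys, and the function name `diagnose_context` are all hypothetical choices made for illustration; the infographic does not prescribe any particular checks.

```python
def diagnose_context(context: dict, word_limit: int = 8000) -> list[str]:
    """Return findings about a context bundle; an empty list means no issue was found.

    The keys and checks here are illustrative assumptions, not a standard.
    """
    findings = []

    # Check 1: components this sketch treats as required are present and non-empty.
    for name in ("system_instructions", "user_prompt"):
        if not context.get(name):
            findings.append(f"missing: {name}")

    # Check 2: repeated retrieved snippets add noise without adding information.
    seen = set()
    for snippet in context.get("retrieved_information", []):
        if snippet in seen:
            findings.append("noisy: duplicate retrieved snippet")
        seen.add(snippet)

    # Check 3: total size, using a word count as a crude proxy for tokens.
    total_words = sum(len(str(value).split()) for value in context.values())
    if total_words > word_limit:
        findings.append(f"oversized: about {total_words} words, limit {word_limit}")

    return findings
```

Running a check like this on the logged context of a failed agent call gives a first answer to whether the failure was a context failure or a model failure.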

The Future is Context

Building powerful AI agents isn’t about finding magic prompts—it’s about engineering comprehensive context systems that provide the right information and tools at the right time.

